11 Nov 2024

NP: We did it! ... It's installed and ready for the Austin Studio Tour event this coming weekend. This is the last post before then. Here are some test videos we made, I had to sync one of them manually (which would explain the lag) ... huge thanks to everyone involved in making it this far!

9 Nov 2024

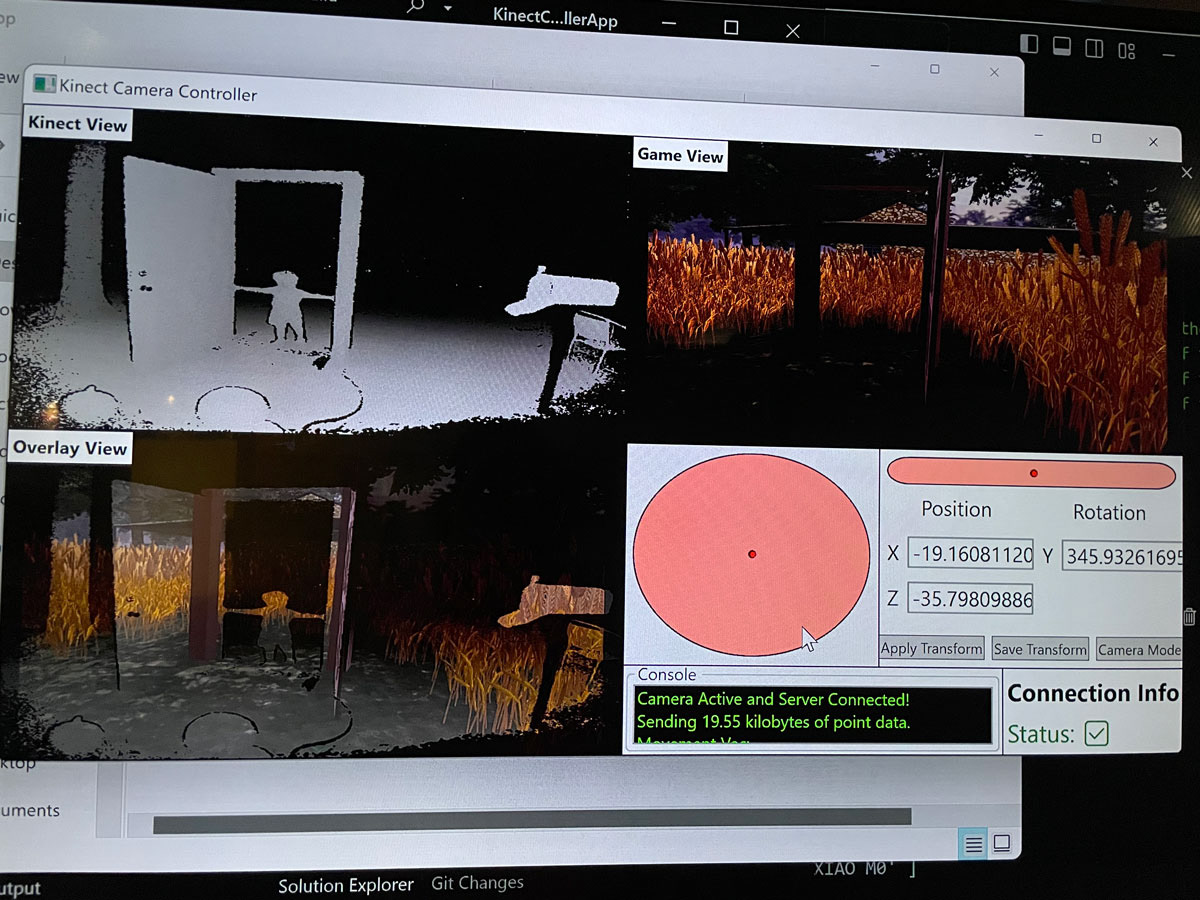

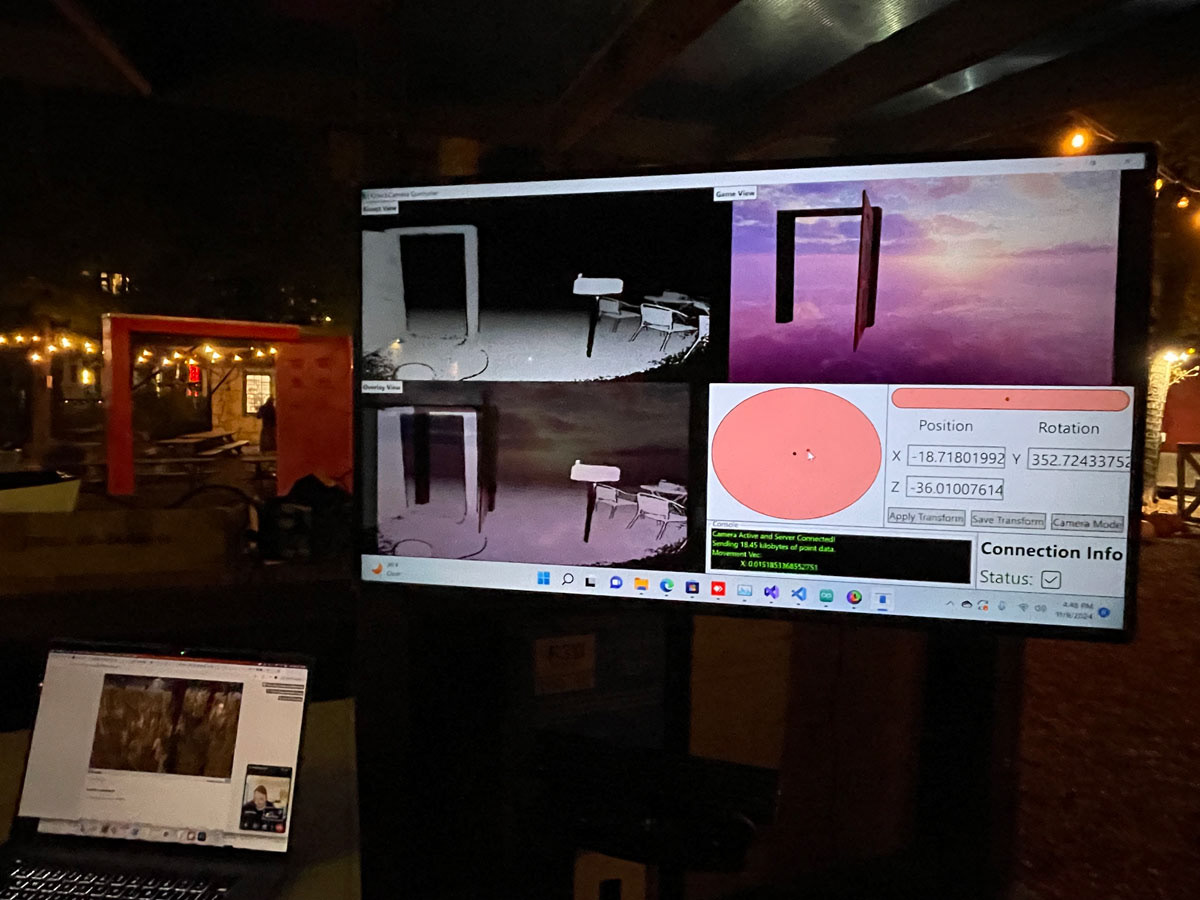

NP: More testing onsite today. All of Isaac's work on the camera app has really paid off, it was fantastic to see it working! Such a smart way to align the camera between the different views. There was some troubleshooting, esp. sending data to the Arduino but it got worked out in the end. All the credit goes to Isaac!

8 Nov 2024

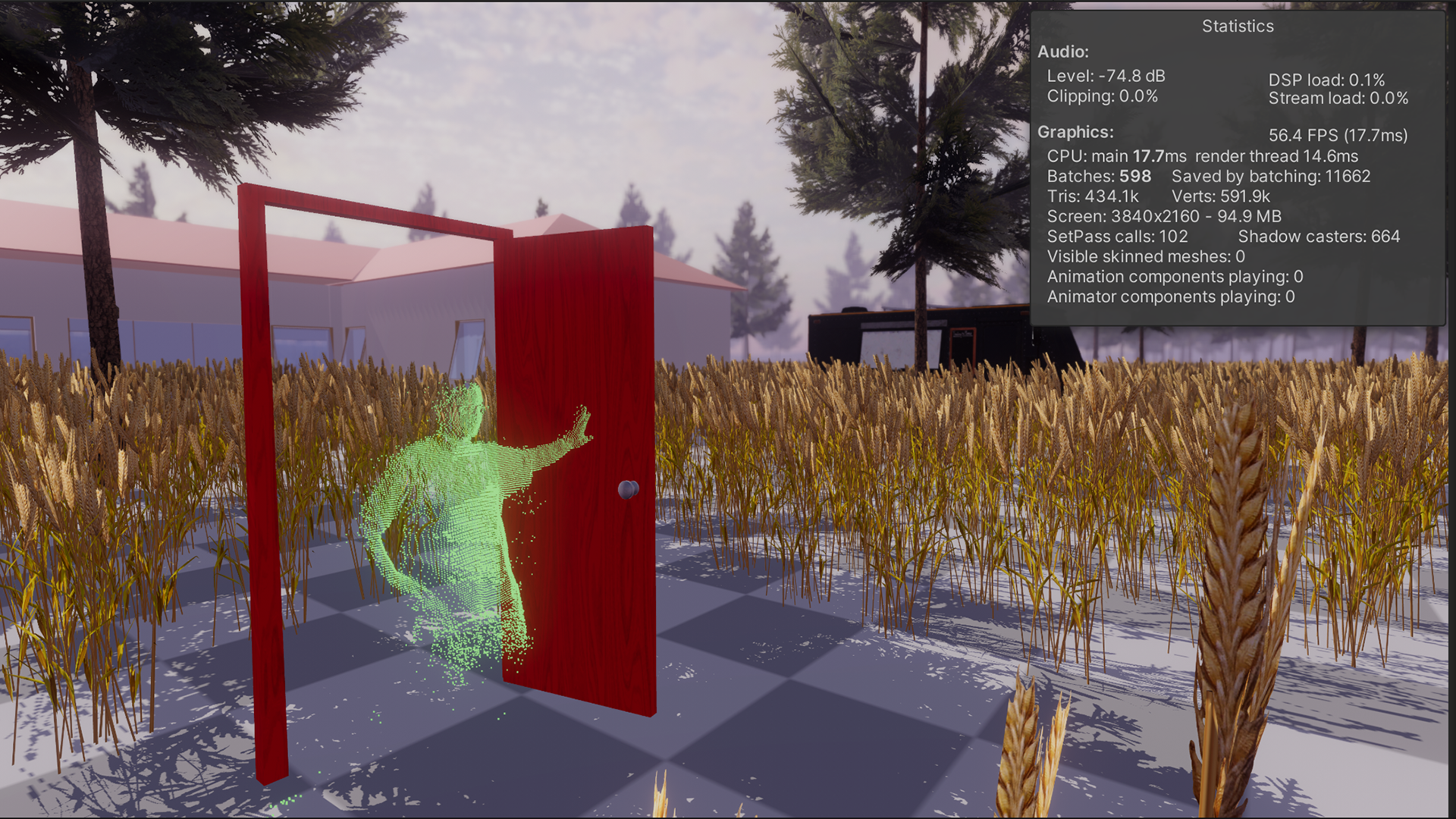

NP: We had issues connecting to the WiFi but now have a dedicated mobile hotspot. This was a quick test with the Kinect tonight ... we've done these before but great to see it with the real door in the background! more tomorrow ...

7 Nov 2024

NP: Jon helped us install the door today irl!

3 Nov 2024

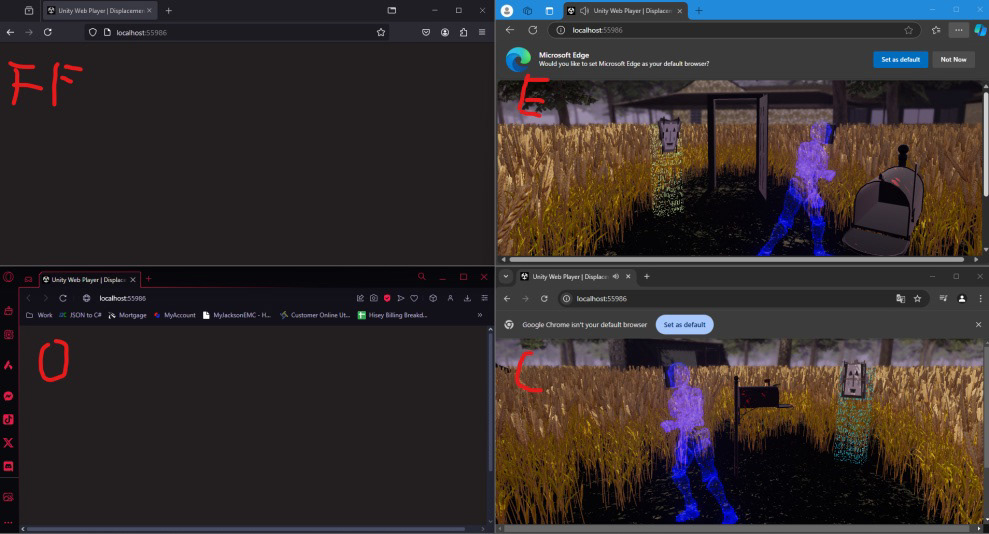

IH: Face to face!

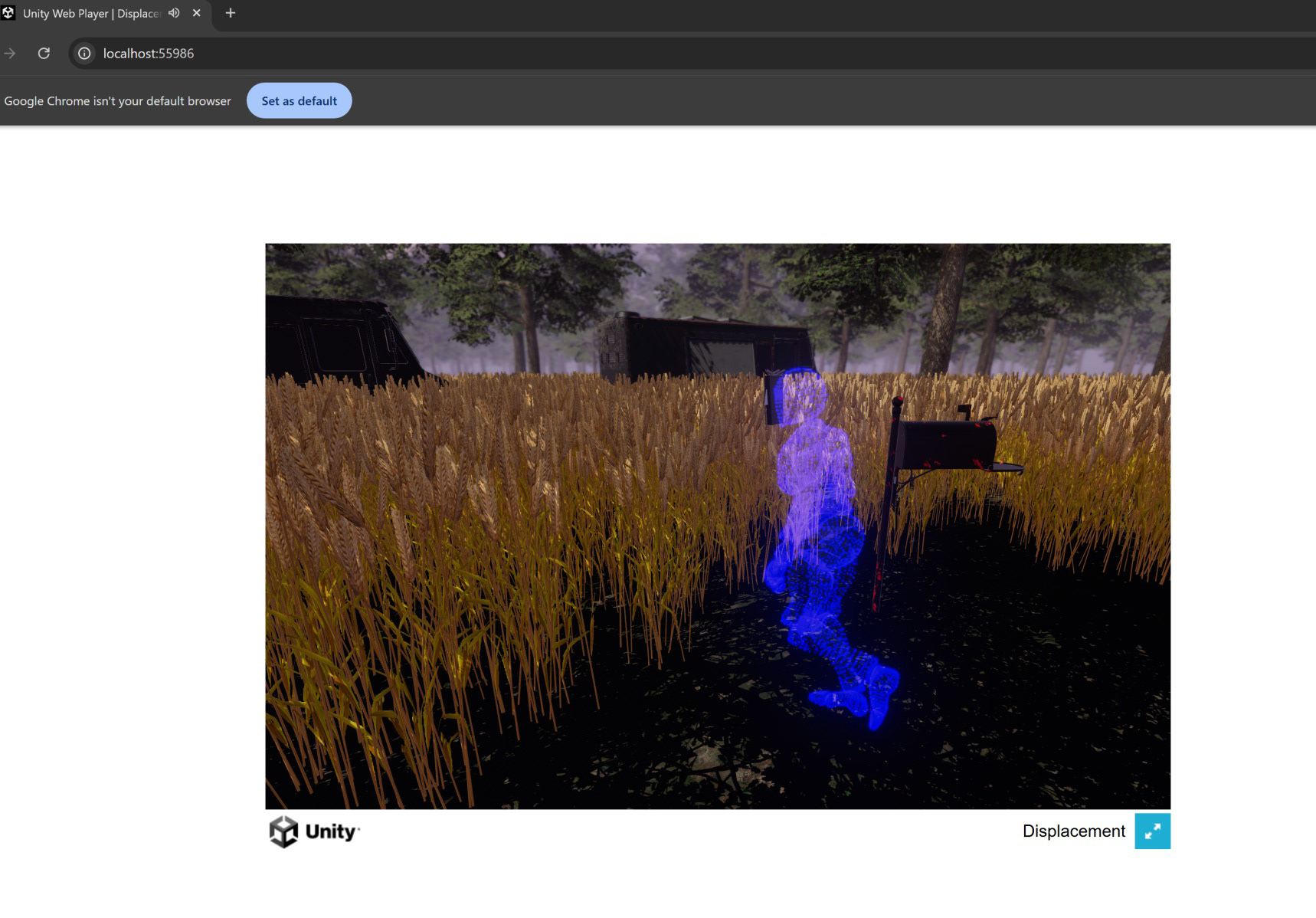

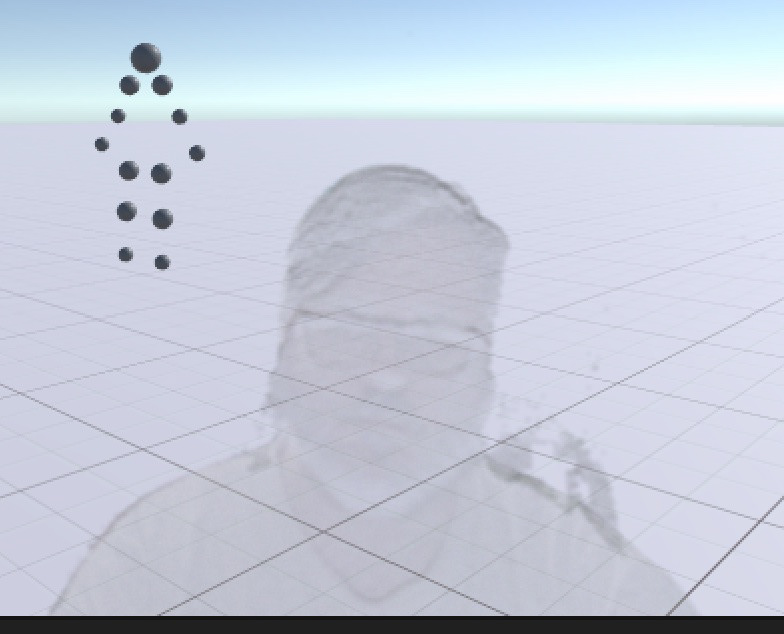

[Experimenting with point cloud avatars] ... Yikes, the VFX Graph doesn't work in WebGL; WebGPU, however, seems to be a bleeding-edge new thing. I upgraded the Unity version and am experimenting with that now ... Sadly it doesn't work in my Opera GX browser but works in Chrome and Edge. Not working yet in Firefox and Opera ...

28 Oct 2024

NP: The environment has really come alive. The combination of the background sound of the birds/wind and the lights has made it feel magical. Isaac is building the multi-player particle system and this work-in-progress is already so atmospheric ...

25 Oct 2024

NP: Huge milestone reached today!!! ... Isaac got everything working and we now have the virtual > physical interaction set up. This means that if a virtual visitor (in the Unity environment) comes within a certain proximity of the (virtual) doorway, the physical door lights up. It's pretty cool! Check out the video below where Isaac explains the process:

21 Oct 2024

IH: Me as a point cloud ...

20 Oct 2024

NP: Made more progress this week. I painted the door and John-Mike installed the lights today. We'll test the virtual > physical interaction with Isaac this week.

14 Oct 2024

IH: We DID IT!!

Kinect Camera Controller is pretty much ready to go!

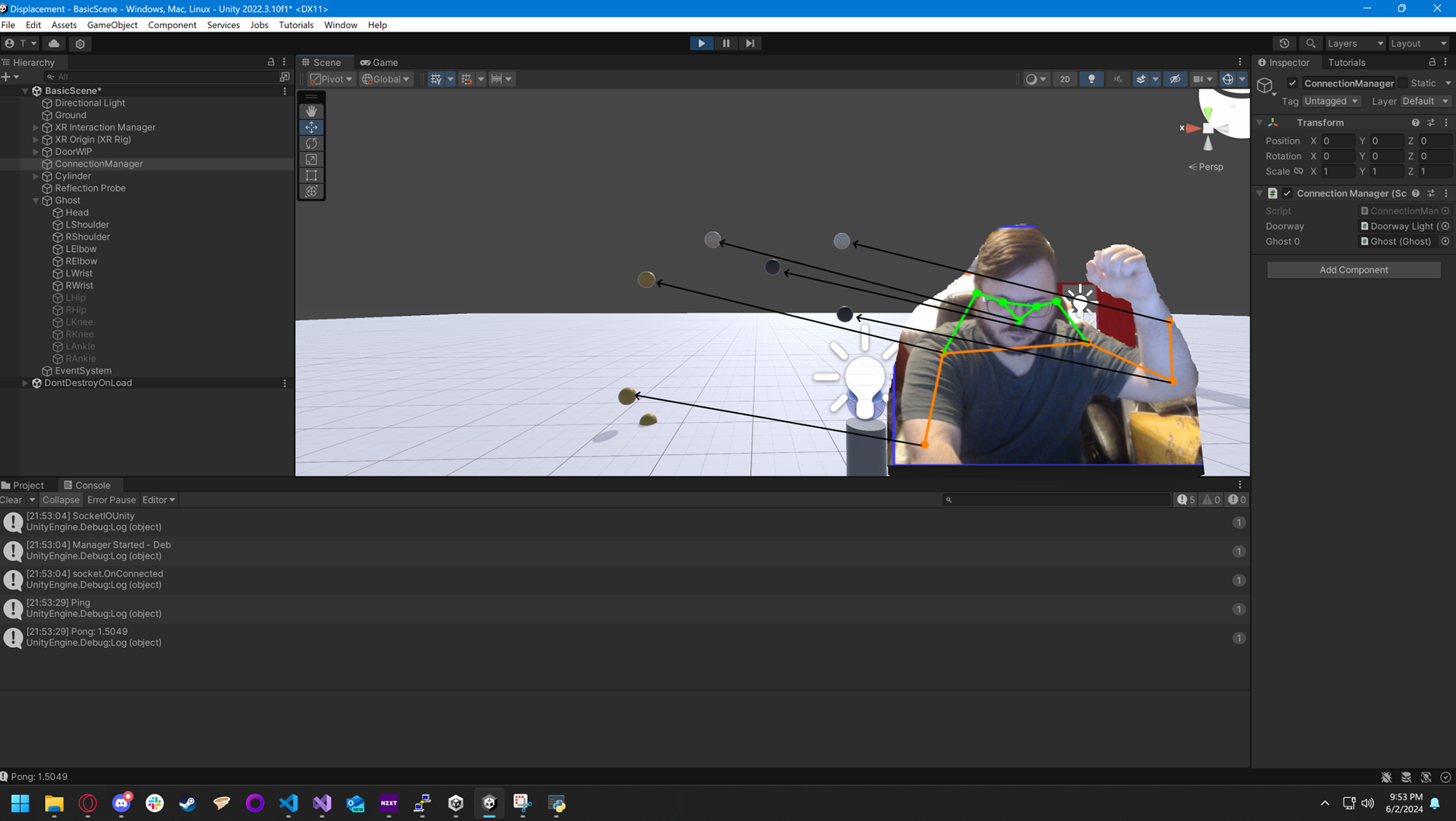

This video demonstrates a Kinect camera controller integration in Unity. There are two camera modes: the player camera, allowing interaction with a player character, and the Kinect camera view, used primarily by project admins. When in the Kinect view, player inputs no longer affect the avatar, and the avatar isn't displayed to others. The Kinect camera in Unity has been set up with real-world depth camera intrinsics, ensuring its view aligns perfectly with the physical Kinect camera. Two sliders control the XZ movement and rotation of the Kinect camera, allowing for more intuitive, rotation-based movement rather than axis-based.

The goal is to export and align the Kinect camera's view with the Unity game view, so objects in both physical and virtual spaces line up precisely. For instance, if a person stands under a doorway in the physical world, their point cloud will align with the virtual doorway in Unity. This is a critical milestone for the project, which is now close to launch. Further work involves refining physical interactions, scene details, textures, and lighting.
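As a rough illustration of what "real-world depth camera intrinsics" can mean for the virtual camera: Unity's camera takes a vertical field of view in degrees, and under a simple pinhole model that angle follows from the image height and the vertical focal length in pixels. The sketch below is in Python for readability, and the function name and example numbers are hypothetical, not values taken from the project.

```python
import math

def vertical_fov_degrees(image_height_px, focal_length_y_px):
    """Vertical field of view implied by pinhole intrinsics.

    image_height_px and focal_length_y_px are assumed inputs; the
    project's actual calibration values are not given in this log.
    """
    half_angle = math.atan(image_height_px / (2 * focal_length_y_px))
    return math.degrees(2 * half_angle)

# Hypothetical example: a 424 px tall image with a 212 px focal length
fov = vertical_fov_degrees(424, 212)
```

With the field of view matched, the remaining alignment comes down to the camera's position and rotation, which is what the sliders adjust.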

13 Oct 2024

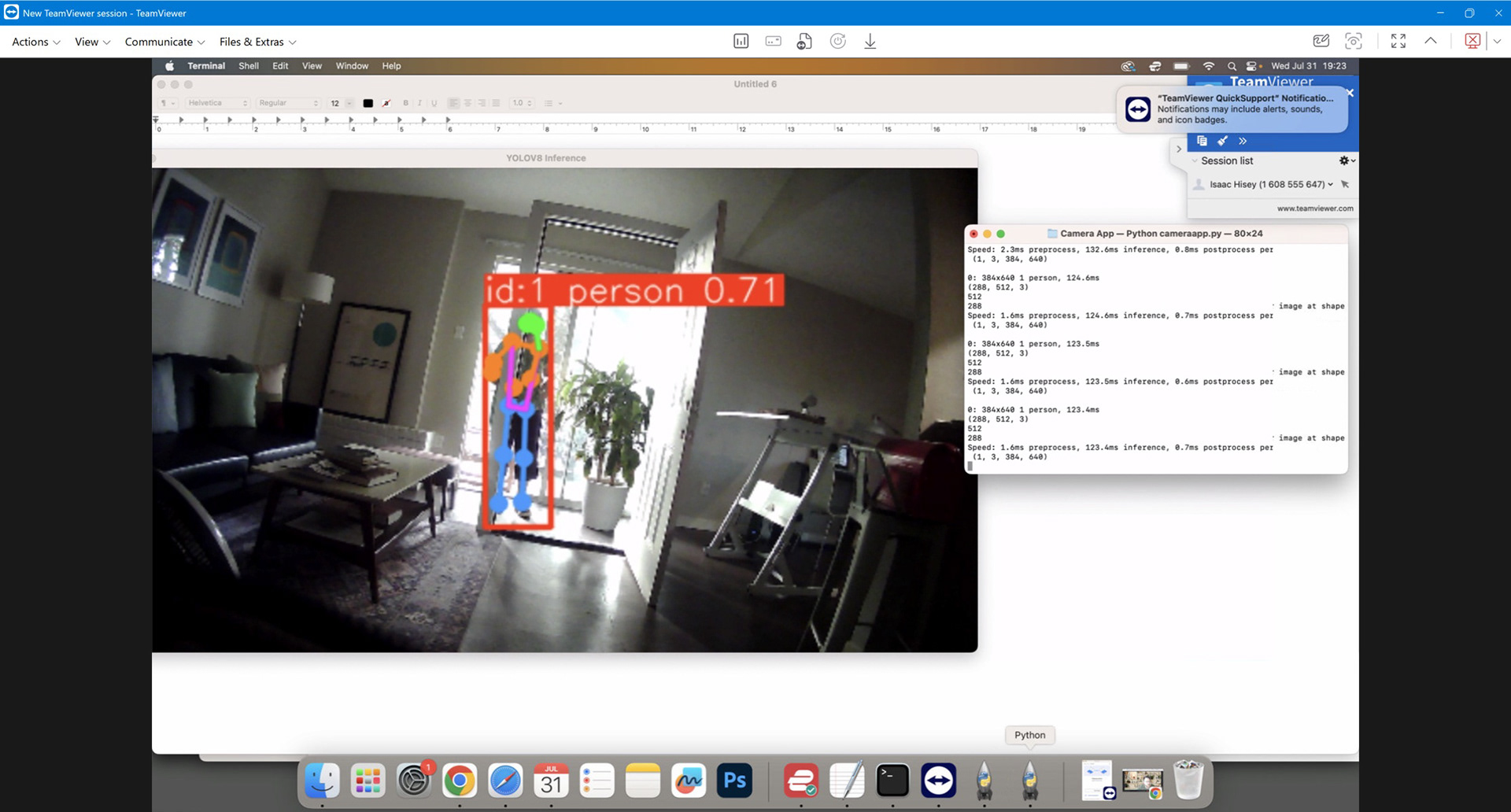

IH: The three videos below describe the Camera App and walk through the application’s purpose, importance, and technical structure, focusing on real-time camera synchronization in virtual environments.

Video 1: "What the Application Does" Summary:

This video introduces the "Connect Camera Controller" app, which allows users to modify the position and rotation of a virtual Kinect camera in real time across all clients. This enables the alignment of the virtual Kinect camera with a physical camera, ensuring that movements in the physical space (like walking through a doorway) are reflected accurately in the virtual space via a point cloud. I explain that while you might think this setup could be pre-configured, the app is necessary for real-time adjustments during presentations or unexpected shifts.

Video 2: "Why the Application is Necessary" Summary:

This video explains why the app is necessary, despite the possibility of setting initial conditions manually. The app addresses real-time adjustments needed during presentations or due to changes in physical conditions (e.g., wind misaligning the camera). Without the app, fixing issues would require updating and rebuilding the Unity project, which is time-consuming. Instead, the app allows quick adjustments that propagate instantly to all clients without the need for a full rebuild. The app’s ease of use makes it invaluable, despite the extra effort needed to develop it.

Video 3: "How the Application Works (Technical Overview)" Summary:

This detailed technical breakdown covers the design and functionality of the app. I explain how the Kinect camera's position and rotation, initially controlled by Unity clients, had to be shifted to the server for better synchronization across all clients. The server controls the camera’s X, Y, and Z position, sending minimal data to reduce bandwidth usage. The app also compresses point cloud data to optimize performance, ensuring responsive real-time updates, even on slower internet connections like 3G or 4G. It allows for the easy control of camera movements and ensures that only necessary data is sent across the network, improving efficiency.
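To give a feel for how little data a camera pose update needs, here is a minimal sketch that packs a position and a single rotation angle into 16 bytes. The wire format, field order, and function names are assumptions made for illustration; the videos, not this log, describe the real message layout.

```python
import struct

# Assumed layout: x, y, z, yaw_degrees as little-endian 32-bit floats
POSE_FORMAT = "<4f"

def pack_pose(x, y, z, yaw_deg):
    """Serialize a camera pose into a fixed 16-byte payload."""
    return struct.pack(POSE_FORMAT, x, y, z, yaw_deg)

def unpack_pose(payload):
    """Inverse of pack_pose; returns (x, y, z, yaw_deg)."""
    return struct.unpack(POSE_FORMAT, payload)

payload = pack_pose(1.5, 0.0, -2.25, 90.0)
pose = unpack_pose(payload)
```

A server holding one authoritative pose and rebroadcasting a payload like this on change keeps every client in agreement without anyone rebuilding the project.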

7 Oct 2024

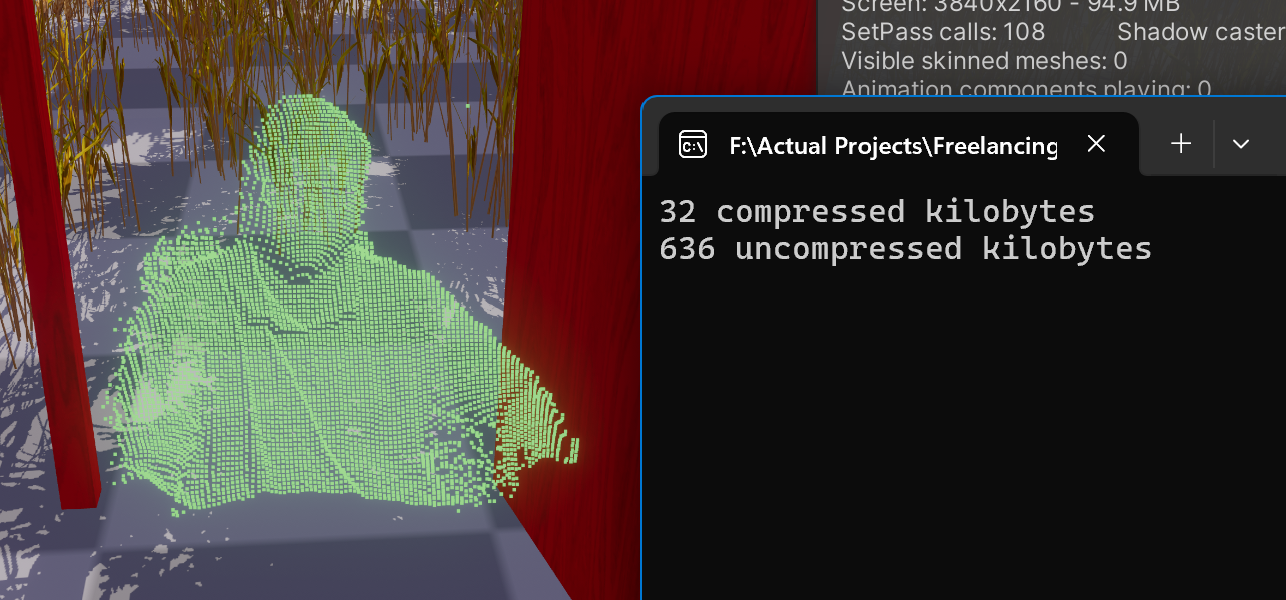

IH: Played around more with lighting, post processing, skybox and trees. Further compressed the data by lowering the resolution of points we are sending through. I think this is a good balance between detail and size, got it down from roughly 1.3 mb per sec per client to 0.3 mb per sec per client ... Next I'm gonna try to finish up the camera controller software as that will be critical to making a good experience and being able to change things up on the fly if needed.
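One simple way to lower the point resolution is to keep every Nth pixel of the depth frame in both directions. This is only a sketch of that idea, assuming a row-major frame at the Kinect v2 depth resolution of 512 x 424; the entry does not say which method was actually used. A step of 2 keeps a quarter of the points, which is in the same ballpark as the drop from 1.3 to 0.3 described above.

```python
def downsample(depth, width, height, step):
    """Keep every `step`-th pixel in both directions of a row-major frame."""
    return [
        depth[row * width + col]
        for row in range(0, height, step)
        for col in range(0, width, step)
    ]

WIDTH, HEIGHT = 512, 424             # Kinect v2 depth resolution
frame = [0] * (WIDTH * HEIGHT)       # placeholder frame for illustration
half = downsample(frame, WIDTH, HEIGHT, 2)
ratio = len(frame) / len(half)       # 4.0 for step=2
```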

6 Oct 2024

JS & IH: There were some challenges importing the assets into the virtual environment template, but this is mostly now resolved. There's still quite a bit of fine-tuning needed but so exciting to see it come together -

29 Sep 2024

IH: Another huge milestone today, we have point cloud generation with data sent remotely over the network from NP's location in TX, to IH in GA - check out the video below -

28 Sep 2024

IH: That was a lot of work!! So many technical issues to overcome but we now have the ability to load an HTML5 Itch.io build of a game: Grab the Kinect Color Camera, then in the bottom left is a merge of those two.

More to do: Implement controls in the bottom right to send data to the server to move the Kinect camera around. Then we can use the controls and overlay to make sure the Camera is aligned as we expect it to be ... I have some concerns as to how that will work given factors like scaling but that's the idea anyway.

14 Sep 2024

IH: Good News!~ We have point cloud generation from data sent over the network!!!

A C# app is running separately from Unity, compressing the data and sending it to the socket server. The socket server then broadcasts that same compressed data to all clients. The individual Unity client(s) then decompress it and render the point cloud from that data.
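The compress, broadcast, decompress flow can be sketched in a few lines. This is Python rather than the C# and Socket.IO code the project uses, zlib stands in for whatever compression was actually chosen, and the "clients" are plain lists standing in for network connections.

```python
import zlib

def compress_frame(raw: bytes) -> bytes:
    """Sender side: shrink the frame before it goes over the network."""
    return zlib.compress(raw, 6)

def broadcast(payload: bytes, clients) -> None:
    """Server side: relay the same compressed bytes to every client."""
    for client in clients:
        client.append(payload)

def decompress_frame(payload: bytes) -> bytes:
    """Client side: restore the original frame bytes."""
    return zlib.decompress(payload)

raw = bytes(1000)                    # placeholder frame data
clients = [[], []]                   # stand-ins for two connected clients
broadcast(compress_frame(raw), clients)
restored = [decompress_frame(inbox[0]) for inbox in clients]
```

The point of the design is that the server never touches the frame contents: it compresses nothing and decodes nothing, it only relays.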

We nearly hit a major roadblock that could have caused significant delays: WebGL and C#-Socket.IO don’t work together! After building and testing the project in the browser, no point cloud data was coming through—big issue.

Fortunately, another team had already solved this problem, though the solution came at a small cost: Socket.IO Client for WebGL (https://assetstore.unity.com/packages/tools/network/socket-io-v3-v4-client-basic-standalone-webgl-196557#description).

This Unity asset converts C# Socket.IO calls from native Windows sockets to JavaScript sockets, making it compatible with WebGL. After implementing it (and rewriting a large part of the point cloud data pipeline), we now have point cloud generation working in the browser.

The video below shows the app in action, receiving point cloud data over the internet in real time. I had to lower the resolution of the point cloud to avoid performance issues in WebGL, and may reduce it further depending on testing results.

13 Sep 2024

NP: John-Mike came over today to test out some LED lights around the doorway. We have enough to start experimenting with the virtual > physical interaction.

9 Sep 2024

IH: For Kinect Data Compression and Streaming Progress, we’ve reached a key milestone in our project, where we now have a C# application that processes Kinect frames into depth and body index data. This data is compressed and transmitted over WebSockets via Socket.IO to Unity clients.

On the client side, the data is decompressed and used to generate a shared point cloud representation of humans captured by the Kinect system. By excluding color data, the uncompressed size per message is about 600-700 KB. After compression, the message size reduces to approximately 200-300 KB.

Currently, we're sending the compressed data at 10 frames per second, resulting in a transmission rate of 2-3 MB/s per client. Our goal is to keep total transmission around 4-5 MB/s per client, leaving us with about 1 MB/s to transmit additional network data, such as avatar positions and other client-specific updates.
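The arithmetic behind these figures is easy to check. The sketch below assumes the uncompressed message is one 16-bit depth value plus one 8-bit body index per pixel at the Kinect v2 depth resolution of 512 x 424; that breakdown is an inference that happens to match the 600-700 KB figure, not something stated in this entry.

```python
WIDTH, HEIGHT = 512, 424     # Kinect v2 depth resolution
DEPTH_BYTES = 2              # assumed: 16-bit depth per pixel
BODY_INDEX_BYTES = 1         # assumed: 8-bit body index per pixel

def raw_frame_bytes():
    """Uncompressed size of one depth + body index frame."""
    return WIDTH * HEIGHT * (DEPTH_BYTES + BODY_INDEX_BYTES)

def bandwidth_mb_per_s(message_kb, fps):
    """Per-client transmission rate, using 1 MB = 1000 KB."""
    return message_kb * fps / 1000

raw_kb = raw_frame_bytes() / 1024        # about 636 KB
low = bandwidth_mb_per_s(200, 10)        # 2.0 MB/s
high = bandwidth_mb_per_s(300, 10)       # 3.0 MB/s
```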

Looking forward, we are exploring the possibility of implementing delta encoding. This would allow us to send only the differences between consecutive frames, significantly lowering the amount of data transmitted. Delta encoding could further reduce network saturation and optimize performance, ensuring smoother experiences as the system scales. This is an exciting step forward, and we are continuously improving both the efficiency and performance of our Kinect streaming system.
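A minimal sketch of delta encoding, assuming both frames are byte strings of equal length: XOR the new frame against the previous one, so unchanged bytes become zeros that compress very well, then reverse the XOR on the receiving side. The function names are hypothetical and zlib is again only a stand-in compressor.

```python
import zlib

def encode_delta(prev: bytes, curr: bytes) -> bytes:
    """Compress only what changed between two equal-length frames."""
    if len(prev) != len(curr):
        raise ValueError("frames must be the same length")
    diff = bytes(a ^ b for a, b in zip(prev, curr))
    return zlib.compress(diff)

def decode_delta(prev: bytes, payload: bytes) -> bytes:
    """Rebuild the current frame from the previous frame and the delta."""
    diff = zlib.decompress(payload)
    return bytes(a ^ b for a, b in zip(prev, diff))

prev_frame = bytes(range(256)) * 4
curr_frame = bytearray(prev_frame)
curr_frame[10] = 99                      # a single changed byte
curr_frame = bytes(curr_frame)
rebuilt = decode_delta(prev_frame, encode_delta(prev_frame, curr_frame))
```

One trade-off worth noting: a client that joins late or drops a message has no valid previous frame, so a scheme like this usually also sends a full frame every so often.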

7 Sep 2024

IH: A little back and forth with me and ChatGPT figuring out how to compress Kinect frame data to send over the network: https://chatgpt.com/share/0a5c78a3-f6c8-4301-9496-4434439ed489

Well it is compressing down by ~50% from 8.5 to 4.3 MBs ... I'm still gonna need to compress that more.

If we sent the data without color 10 times per second this would be our overhead:

Note that doesn't include sending things like client location data for the avatars and anything else we might want to send. However it's within acceptable limits:

2 Sep 2024

NP: I created a landing page for our (temp) Displacement.app website using a clip from my previous video. It's not ideal, but a placeholder for now -

31 Aug 2024

NP: We had a good catchup today. The last few months have been busy for each of us, Isaac was moving house, and Justin moved to Sweden! ... still, we're making progress.

Isaac had a major breakthrough with the Kinect > Unity pipeline, this is so exciting to see! ... and Justin has been building a mirror image of the Batch site as a Unity environment. Check out the videos/images below:

In Unity with dynamic resolution and Kinect floor aligned to 3d world floor

IH: When we have the camera set up on site I'll need a rough measurement in meters of where the camera is relative to the bottom center of the door. This way we can set the in-game representation of the Kinect camera to the same position so the point cloud lands where it should.
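To show why that measurement matters, here is a simplified sketch that moves a point from camera space into a door-relative space using the measured offset and a single yaw angle. It ignores camera pitch and roll and any difference in axis handedness between the Kinect and Unity, so treat it as an illustration of the idea rather than the project's actual transform; all names and numbers are hypothetical.

```python
import math

def camera_to_door_space(point, camera_offset, yaw_deg=0.0):
    """Rotate a camera-space point about the vertical axis, then translate.

    point:         (x, y, z) in meters, relative to the camera
    camera_offset: camera position in meters, relative to the bottom
                   center of the door (the measurement requested above)
    yaw_deg:       camera rotation about the vertical axis (assumed only)
    """
    px, py, pz = point
    yaw = math.radians(yaw_deg)
    rx = px * math.cos(yaw) + pz * math.sin(yaw)
    rz = -px * math.sin(yaw) + pz * math.cos(yaw)
    ox, oy, oz = camera_offset
    return (rx + ox, py + oy, rz + oz)

# Hypothetical: camera 3 m in front of the door, 1 m up, facing it
landed = camera_to_door_space((0.0, 0.0, 2.0), (0.0, 1.0, -3.0))
```

If the offset is off by half a meter, every point in the cloud lands half a meter away from the virtual doorway, which is the misalignment the measurement is meant to prevent.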

8 Aug 2024

NP: We decided to go back to our original idea of using a Kinect, which somehow got put aside as we tried other options. Anyway, this is good news and could work well, esp. now that we have a site where the Kinect/camera can be positioned on a nearby tree at an angle we specify.

IH: The Kinect2 can track up to six skeletons at one time. Each of these skeletons has 25 joints. Camera Coordinates (X, Y, Z, W): The depth range of the Kinect is eight meters, but the skeleton tracking range is 0.5m to 4.5m, and it has trouble finding a skeleton at closer than 1.5m because of the field of view of the camera. So the cameraZ value will usually fall somewhere between 1.5 and 4.5. We could use this; then, instead of having to estimate joints, we'd get back data that is legitimate 3d spatial coordinates.
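The ranges quoted above translate directly into a sanity check on incoming joints. A small sketch, assuming each joint arrives as an (x, y, z) tuple in meters with z as the distance from the camera; the tuple layout and names are assumptions.

```python
TRACK_MIN_M = 0.5    # skeleton tracking range quoted above
TRACK_MAX_M = 4.5

def in_tracking_range(joint):
    """True when the joint's camera-space depth is inside the tracking range."""
    _, _, z = joint
    return TRACK_MIN_M <= z <= TRACK_MAX_M

joints = [(0.1, 1.2, 1.5), (0.0, 1.0, 5.0), (0.3, 0.9, 0.2)]
usable = [j for j in joints if in_tracking_range(j)]
```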

31 Jul 2024

NP: We have the door! Blue delivered the door today ... after years of imagining this, it's becoming a reality and there's now a door in the middle of my living room!

21 Jul 2024

NP: Now that we have the site, we're starting to make progress again ... I ordered the materials for the doorway so Blue can start building it ... Isaac, Justin and I had a great catch-up this weekend and we're moving forward with building the new virtual environment (Justin), and working on the camera/point cloud interaction (Isaac). I've now ordered a camera which I'll install in my apt and then be guided by Isaac on how to get the live feed to him.

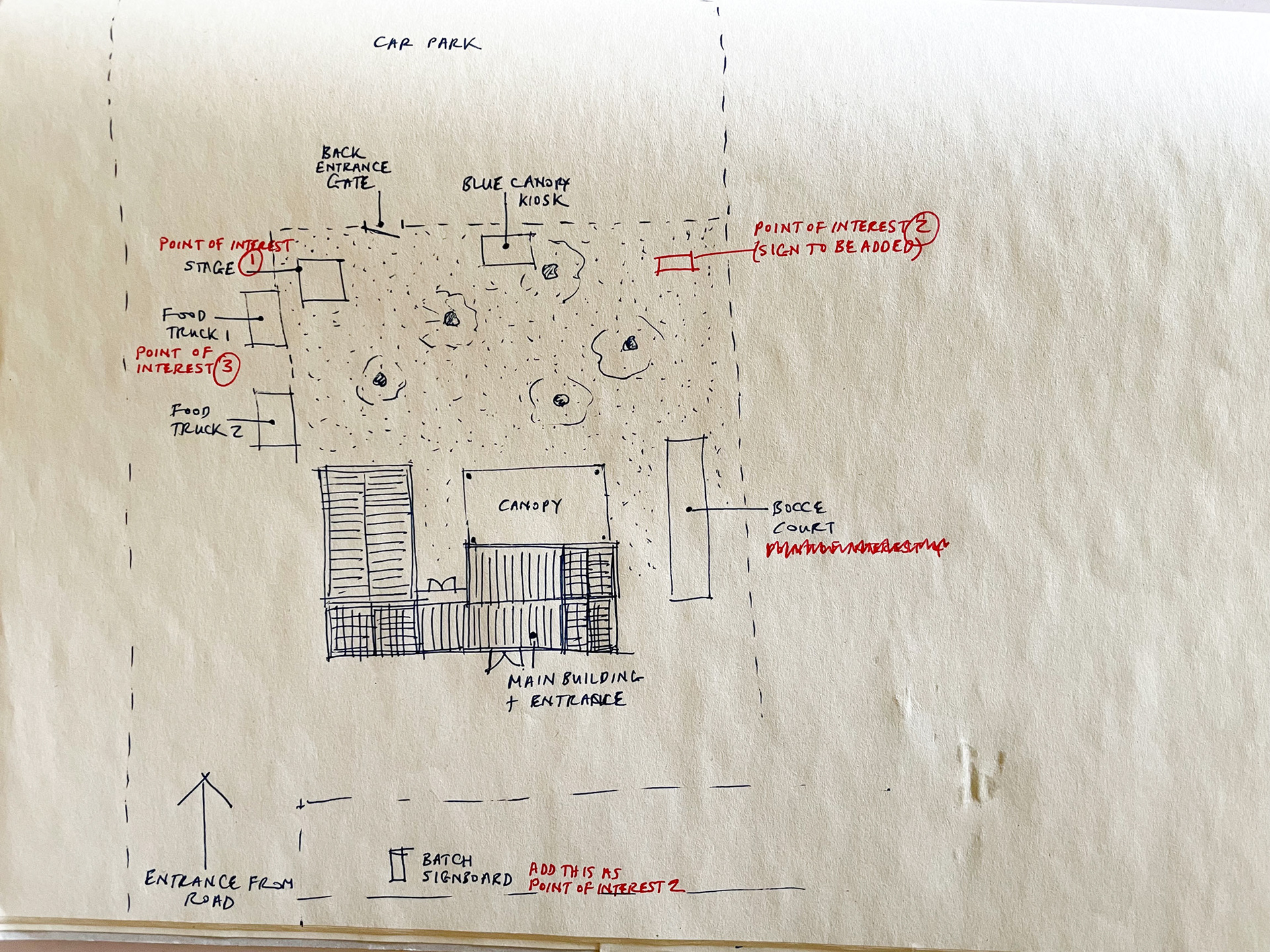

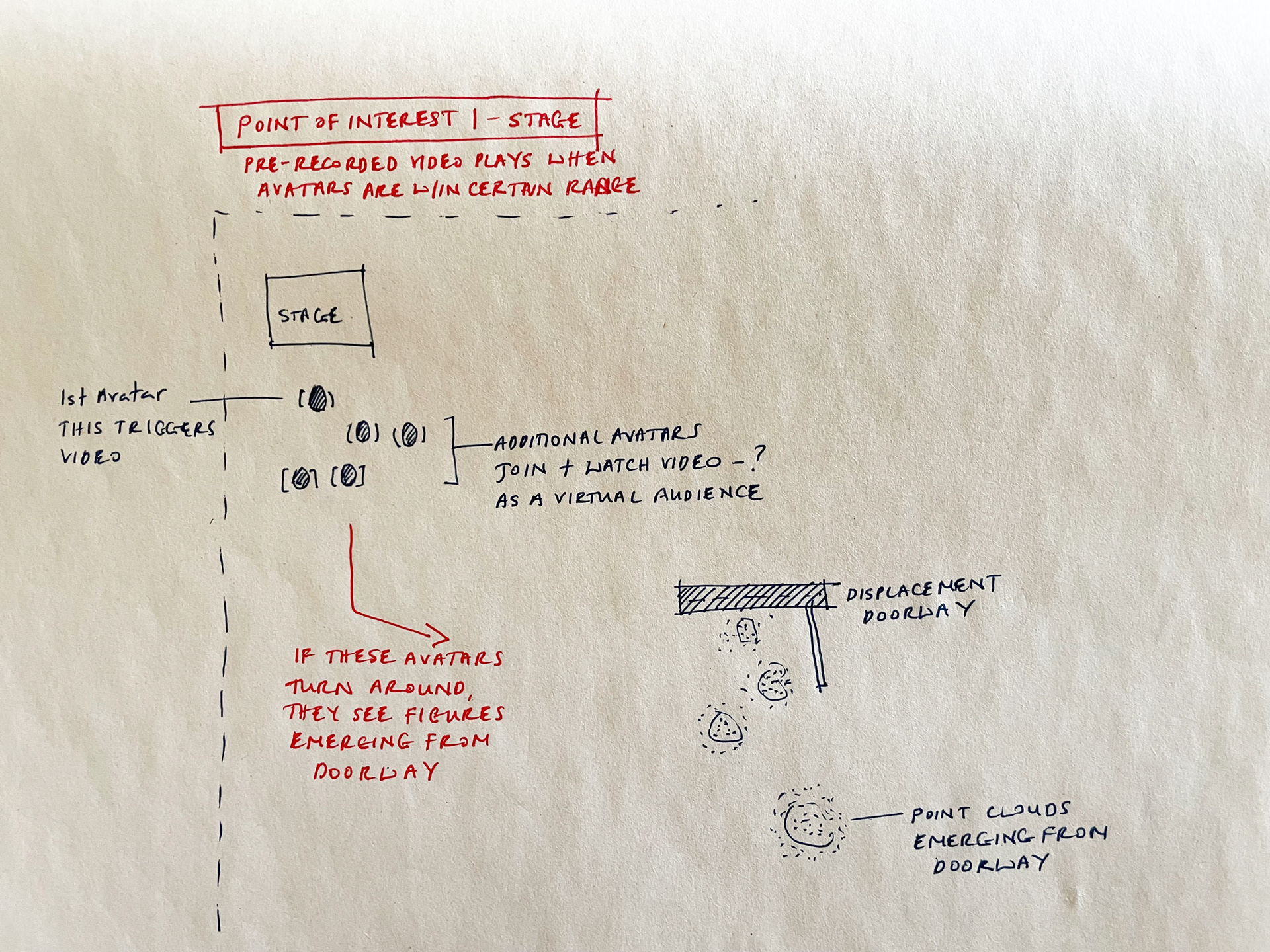

We also spent some time ideating around how we might create 'points of interest' in the virtual environment for visitors to interact with, learn a bit about Batch, and we're hoping listen to some local bands! ... some embarrassingly rough sketches I created -

18 Jul 2024

NP: There have been several new updates since the last post. First, we have a new team member: Blue! ... Blue is an amazing furniture designer and woodworker who is helping us build the physical doorway. Really excited to work with him.

The second update, also very exciting, is that we now have a new site for the project: Batch ATX in East Austin. Gabriel, the events manager, has been so lovely and I'm excited to discuss more with him when he's back in Austin at the end of the month. We already have ideas for how we can showcase some of their story and also regular music events as part of the virtual environment.

5 Jul 2024

NP: Alas, we didn't get the site downtown. I got a message from one of the board members at the City of Austin Arts & Culture with feedback. The primary reason they gave was 'Materiality', i.e. concerns about security, vandalism, and so on ... the plan is to continue moving forward!!!

The new strategy for securing a site is to rent space at a commercial or art venue. I've already made a start with this. Hopefully, more soon ...

12 Jun 2024

JS: Second iteration of the Unity environment with the doorway, mailbox, and wheat field -

3 Jun 2024

JS: First iteration of the Unity environment with the wheat field -

31 May & 2 Jun 2024

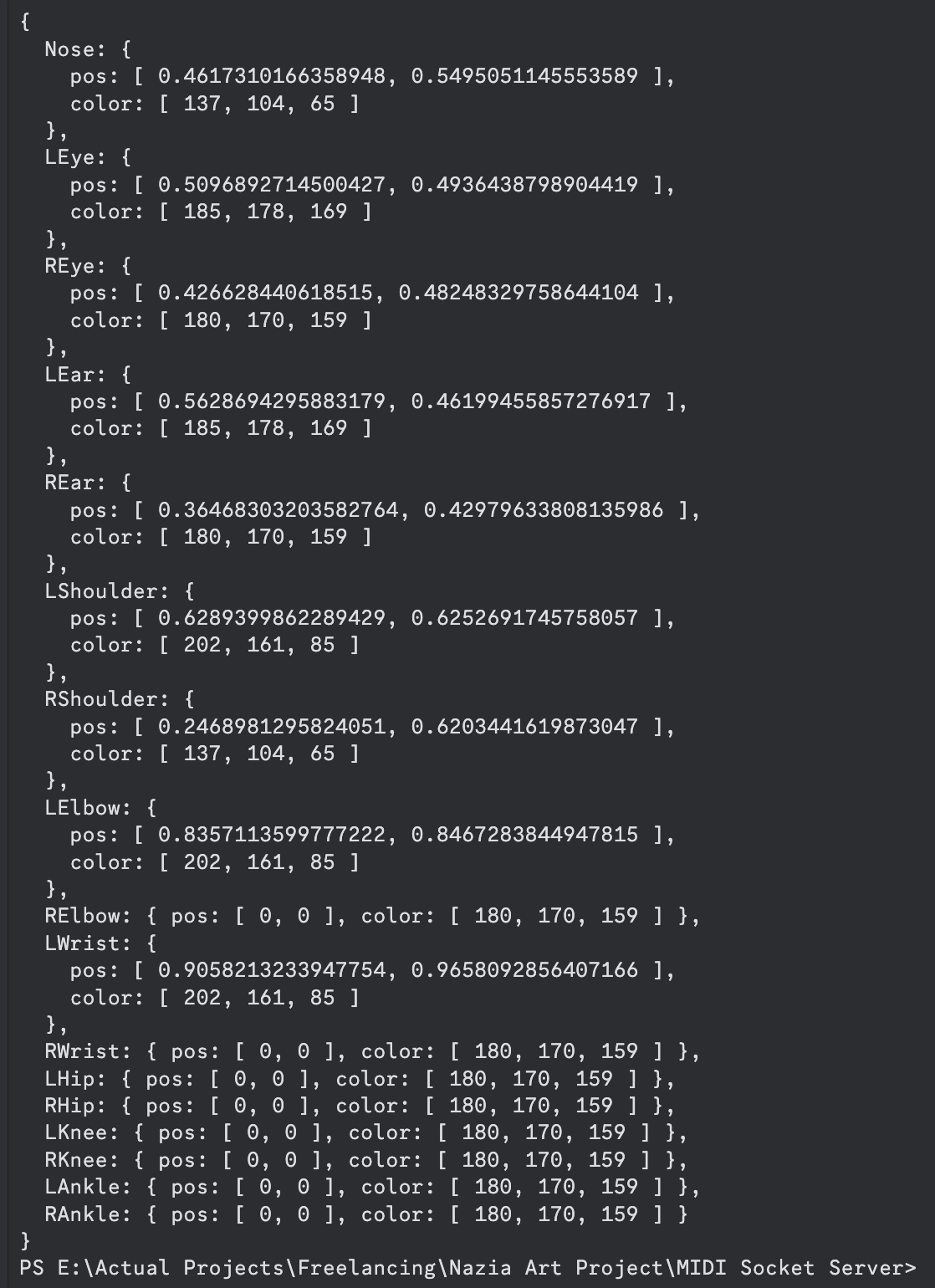

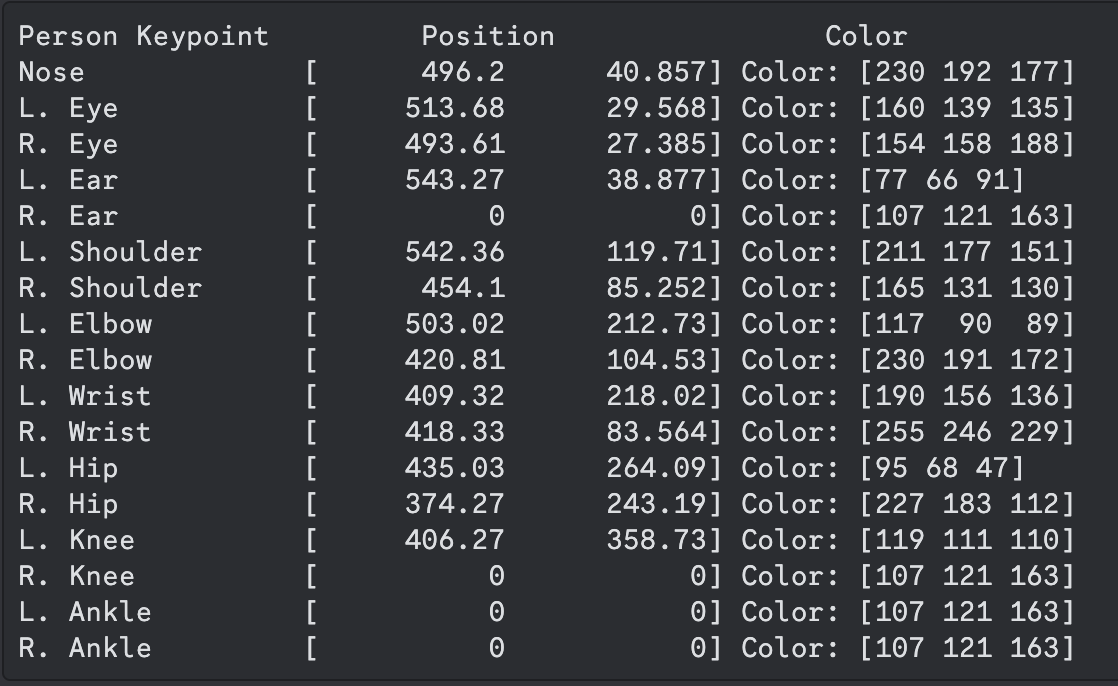

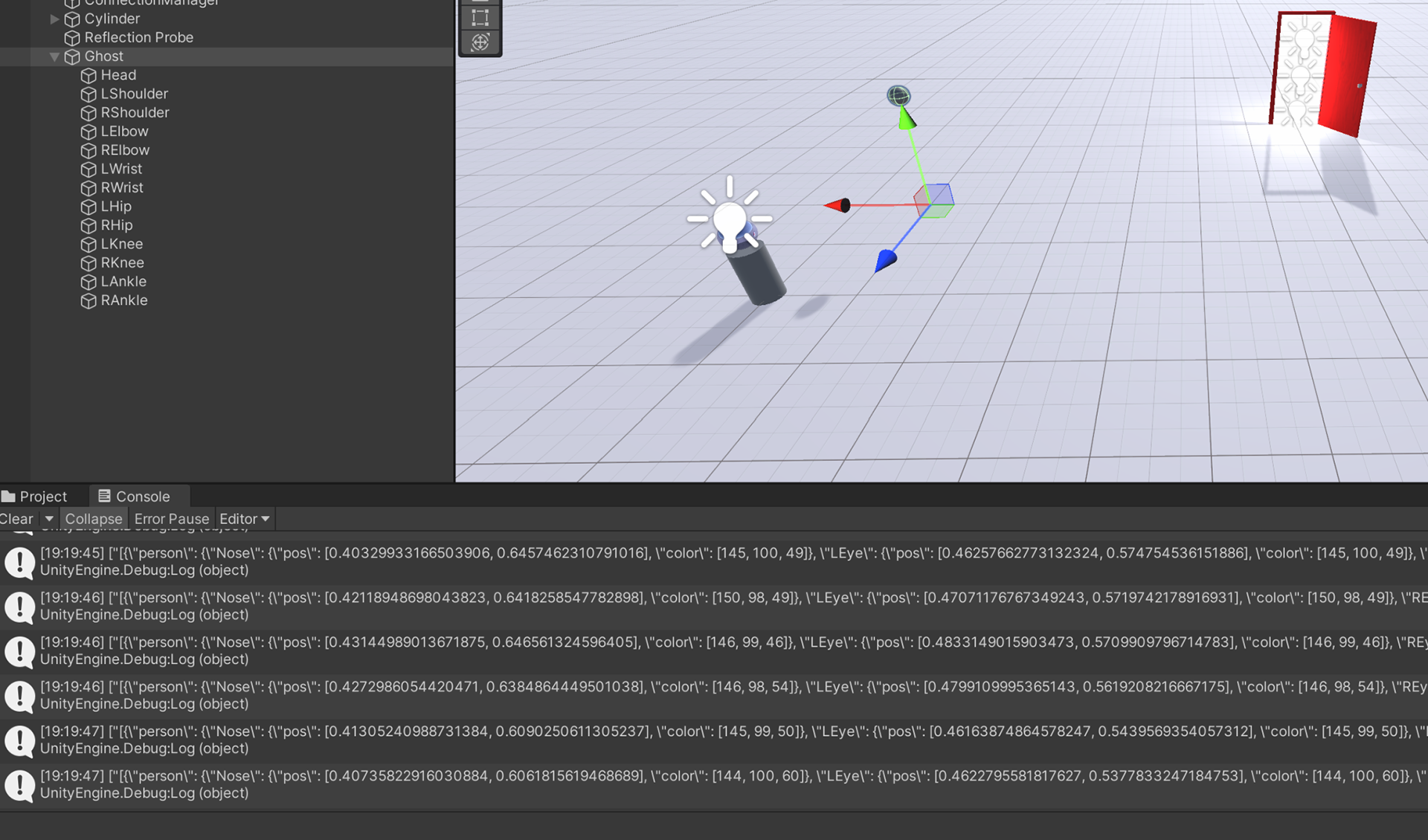

IH: I have pixel positions and color being pulled from keypoints! ... ... We have data from the Camera App to the Socket Server; and, from Camera to Server to Unity ... It's not the most beautiful but we have interactions in Unity!

31 May 2024

NP: Quick update to share that I connected with Blue! ... He's an amazing carpenter based in Austin and our newest collaborator. He'll be building the (physical) doorway. More on that soon ...

18 May 2024

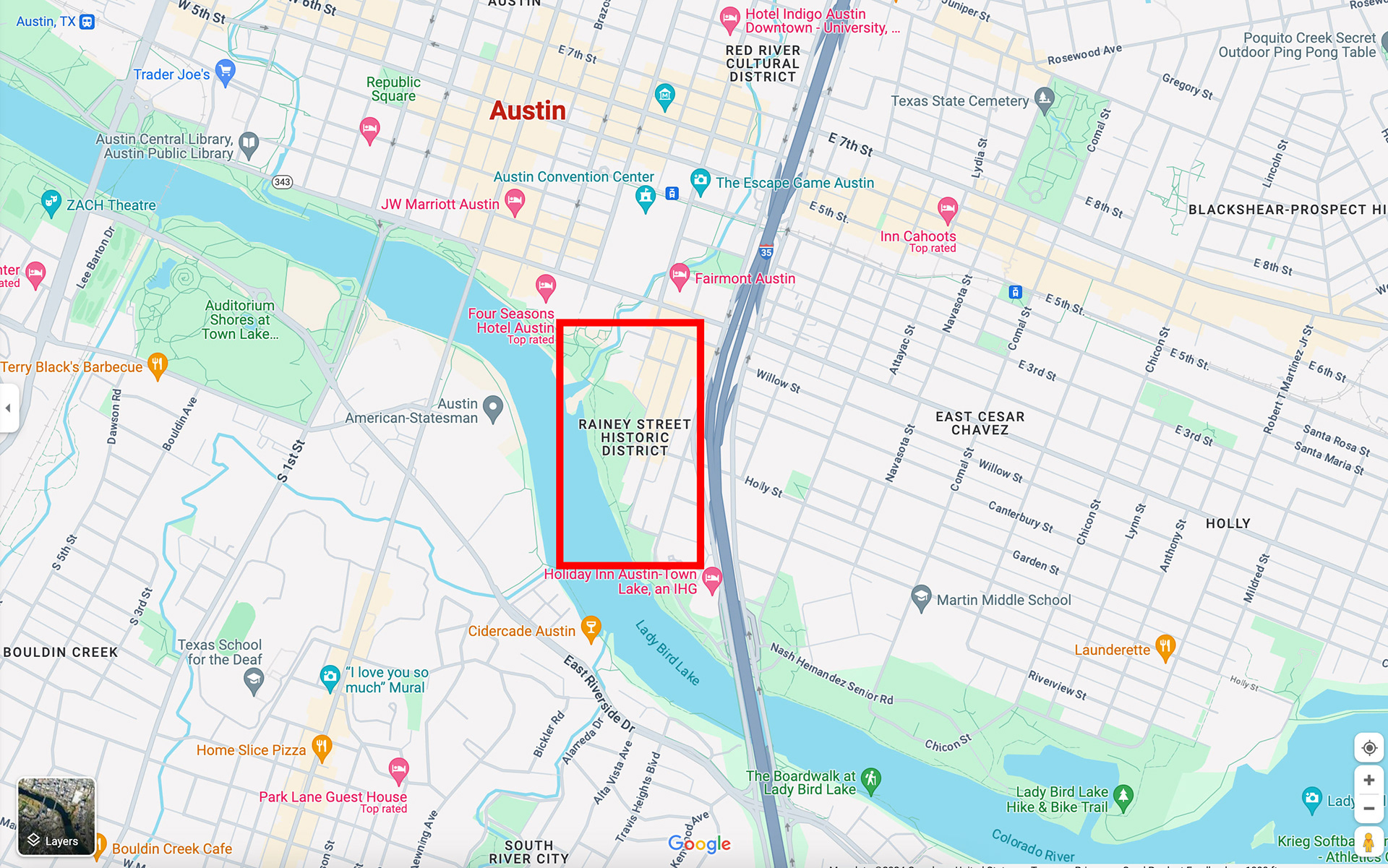

JS: One of the challenges in building a virtual 3d environment for Displacement is to replicate the physical site as accurately as possible. In order to do this, we looked at different publicly available data from Google Maps, Google Earth, LiDAR data, etc. I discovered that the City of Austin has an archive of public geospatial data, including AutoCAD drawings here.

I used this info to start developing a 3d model. The site outline was isolated using AutoCAD data and then exported to 3ds Max and a basic 3d layout of the site was created. This 3d environment was then imported to Unity as a test. This initial test is now being developed as a working prototype.

15 Apr 2024

IH: As a first step to creating a presence in the virtual space reflective of the physical space we must be able to identify that physical presence. We want to be able to detect a person in the physical space and create some representation of them in the virtual space, like a point cloud. To that end we're using some libraries to allow a computer to "see" into a physical space, find people, and estimate their pose. To do this we are using Python and the libraries OpenCV and YOLO (You Only Look Once) V8's pose estimation model. Next is seeing what kinds of data we can extract and how we can leverage that data to create the virtual presence.

6 Apr 2024

NP: There were a couple of developments this week. The first: I heard back from the Trail Conservancy which is great news! They've approved the project on their side and are suggesting a location downtown at the Rainey Street Trailhead. The project would need approval from the City and that meeting is due to take place at the beginning of June. All encouraging although it's not yet final. The only drawback is that, with this timeline, Justin will have limited time to build the virtual environment.

[Link to doc: https://thetrailconservancy.org/projects/rainey-street-trailhead/]

Secondly, I had a great catch-up with my friend Sean in London. I'm really excited that he's agreed to help us in a consultative capacity. Please see the link to his website above.

He had some great suggestions that we're looking into. So far, we've been thinking of using a single camera for 3d capture but another approach might be to consider an iPhone + app (that comes with built-in libraries). This is one Sean suggested: https://record3d.app/

28 Mar 2024

NP: I had a really good call with Isaac today, in which we talked through how the camera/point cloud interaction would work. It helped to clarify - and simplify - our approach. We realized that actually, we don't have to use motion tracking at all. And also that we probably don't need a depth camera either! ... all we need is to register someone being 'present' in/across the doorway and an outline of their form.

Isaac suggested using a regular camera + OpenCV libraries to generate a point cloud. We also don't need the exact location of a person, just an approximation which we can use to create the point cloud, and define at what point this begins to 'dissipate' in the virtual environment. I think this will be adequate for this prototype.

I'm sure there are more detailed considerations that will arise, but this is a good starting point. Also, Isaac suggested we use a Mini PC, which seems to solve different problems that came up before: power, integration w/ Arduino, camera, libraries, etc. ... plus, this is a platform he's confident working in.

More to come ...

22 Mar 2024

IH: Here are some preliminary thoughts on solving the pipeline for the addition of a camera.

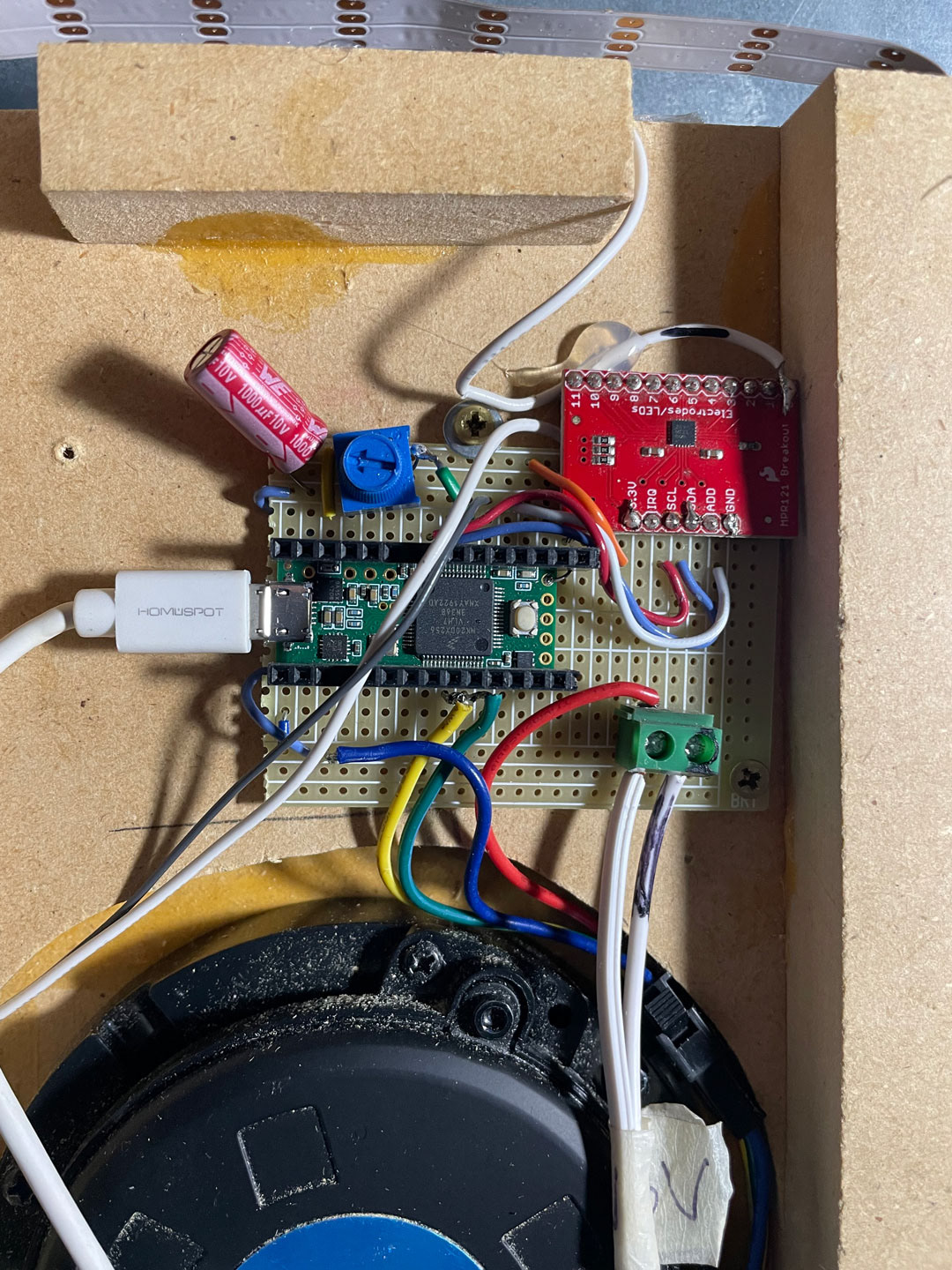

Sounds like we’ll need to incorporate a Pi, which isn’t too surprising given the lack of power an Arduino has. That said, we still need the Arduino to interact with the panel since that’s really what it's for.

Here is what I’m imagining in the abstract:

The Pi can be connected to the Arduino and run the broadcaster app and the camera app.

The camera app can use some libraries to track faces / people.

The bounding box and pixel resolution can be used to estimate the position of the human and spawn a point cloud / visual in Unity.

The Pi will do the calculations and just send over the data needed to estimate the location in Unity.

This prevents us from having to send over one or more images over the network.
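The bounding-box idea in the steps above can be sketched with a pinhole camera model: a person of known height appears smaller the further away they are, so distance is roughly focal length times real height divided by pixel height. Everything here is an assumption for illustration, including the 1.7 m person height, the focal length in pixels, and the function name.

```python
def estimate_position(bbox, focal_px, image_width_px, person_height_m=1.7):
    """Estimate (lateral offset, distance) in meters from a bounding box.

    bbox is (left, top, right, bottom) in pixels. The person height and
    focal length are assumed values, not measurements from the project.
    """
    left, top, right, bottom = bbox
    distance_m = focal_px * person_height_m / (bottom - top)
    center_x = (left + right) / 2
    lateral_m = (center_x - image_width_px / 2) * distance_m / focal_px
    return lateral_m, distance_m

# Hypothetical detection in a 1280 px wide image
lateral, distance = estimate_position((1040, 100, 1240, 525), 1000, 1280)
```

Only these two numbers would need to cross the network, which is the bandwidth saving the list describes. The accuracy question raised below is real, though: a crouching person or a child breaks the fixed-height assumption.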

So things we may have to solve for are:

Pi processing power, what kind of FPS can we get?

How accurately can we estimate position based on an image?

If we are using a Pi we can likely use a Kinect instead of a camera and Justin’s expertise may be able to help in this regard.

If we use a Kinect I think we’ll have to do a lot less estimating.

In any case we’ll likely be displaying a generically human shaped point cloud in Unity, probably nothing high detail.

Just some starting thoughts as I do more research ...

20 Mar 2024

NP: The Displacement project has essentially 2 interactions:

1/ PHYSICAL > VR: Camera is triggered when someone walks over the threshold of the physical doorway, which generates a point cloud form in Unity;

2/ VR > PHYSICAL: the physical doorway will have LED lights built in, which light up when an avatar walks through the virtual doorway in the virtual environment.

So far, we've been working on 2/ - the last test showed the physical panel light up from a remote interaction in Unity [see entry for 16 Mar]
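For interaction 2/, the trigger can be as simple as a distance check between the avatar and the virtual doorway. The 25 Oct entry describes it as coming "within a certain proximity"; the 2 m radius and the function name below are placeholders, and the sketch is in Python rather than Unity C#.

```python
import math

def door_should_light(avatar_xz, door_xz, radius_m=2.0):
    """True when the avatar is within radius_m of the doorway on the ground plane."""
    dx = avatar_xz[0] - door_xz[0]
    dz = avatar_xz[1] - door_xz[1]
    return math.hypot(dx, dz) <= radius_m

near = door_should_light((1.0, 1.0), (0.0, 0.0))
far = door_should_light((3.0, 0.0), (0.0, 0.0))
```

The result of a check like this is what would be sent on to the hardware side to switch the LEDs.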

The Physical > VR interaction, however, has raised a lot of questions, which we've yet to resolve. At this point, we're still considering options: should we use Arduino or Raspberry Pi; is it best to go with Kinect (which has pre-built libraries) or other depth cameras. Also, who would build this? ... discussions ongoing ...

16 Mar 2024

NP: It was so great today to see the second prototype working! ... for this one, we were looking at creating an interaction from the Virtual to the Physical environment ... John-Mike updated the original Arduino code to trigger the panel to light up from a remote interaction in Unity; and, Isaac created both the Unity test environment and code to communicate between the two. Here's a screen recording from earlier today -

22 Feb 2024

NP: We're really pleased to welcome Justin to the team! He's going to be helping us build the 3d environment in Unity ... more soon.

18 Feb 2024

NP: Woo hoo! ... we got the first prototype working. The touch panel in my apt is now interacting with the virtual doorway in Unity, in real-time. Very exciting! ... here's a screen recording and Isaac sharing an overview of the process -

9 Dec 2023

NP: The app Isaac created is working and picking up the Midi data from the panel, here's a short screen recording -

IH: Phase two is now updating that app to send the data to my server. That means I also need to modify the server to listen for and receive that data ... More to come soon!

7 Dec 2023

IH: My next step now is to modify the existing simple express and socket io server I'm running to take in Midi input direct from a device and broadcast that message via the socket.io server. There is going to be some trial-and-error work as well to get the server to relay the on/offs in a manner that we like for Unity to adjust the door's brightness smoothly.
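One common way to turn abrupt on/off messages into a smooth brightness change is exponential smoothing: each update moves the current value a fixed fraction of the way toward the target. This is offered as one possible approach to the trial-and-error mentioned above, not as what was eventually built; the alpha value is a placeholder.

```python
def smooth(previous, target, alpha=0.2):
    """Move `previous` a fraction `alpha` of the way toward `target`."""
    return previous + alpha * (target - previous)

# Simulate a panel switching on: brightness eases from 0 toward 1
brightness = 0.0
history = []
for _ in range(50):
    brightness = smooth(brightness, 1.0)
    history.append(brightness)
```

A larger alpha reacts faster but looks jumpier; a smaller one looks softer but lags behind the panel.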

Essentially the pieces will align together like so: Nazia will run the app on a laptop, or some small computer, connected to the panel's output. I'm making that app now to take the output from the panel and send it over the internet to the server application running on my website. That then broadcasts the midi messages to any Unity client connected to the site. Right now I'm working specifically on modifying the server and building the app Nazia's machine will run.

More soon!

6 Dec 2023

NP: After weeks of other commitments I'm happy to be back on this project ... JM wrote the code for outputting the MIDI data from the touch panel but I had trouble uploading it. He came over today and got it resolved. Really excited it's working now! ... the next step will be sending this to Isaac to use in Unity. The goal is to have the interaction with the physical panel mirrored - in real-time - in the virtual environment.

4 Oct 2023

NP: The physical touch panel responds to proximity and the light is bright/dim depending on how near/far someone is. Q: Will the code capture a fluid range of proximities-? ... so the virtual panel mimics the real panel?

JM: Yes the value it outputs will be the same as the LED brightness.

IH: ... my general understanding is we should be able to make it match the real output of the panel. The light on the panel is likely responding to the same MIDI value that will be passed to Unity via JM's code. I can take that value, most likely a number between 0 and 127 or a number between 0.0 and 1.0, and map that to the door's brightness in Unity.
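The mapping described here is a one-liner. A sketch assuming the panel sends a 7-bit MIDI value (0 to 127) and the door wants a brightness between 0.0 and 1.0; out-of-range input is clamped as a precaution.

```python
def midi_to_brightness(value):
    """Map a 7-bit MIDI value (0-127) to a brightness in [0.0, 1.0]."""
    clamped = max(0, min(127, value))
    return clamped / 127

off = midi_to_brightness(0)
full = midi_to_brightness(127)
```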

I've got a VR project up and running and am able to walk around a space and interact with the Mailbox and a 3d Cube with physics. I can upload a vid or two showcasing the current state a bit later and then move on to the next step, which is getting some external interactivity and the doorway implemented.

2 Oct 2023

IH: I'm excited to start making Unity and panel interactivity a reality. I've used the Unity library JM recommended for receiving MIDI input from a local device. To enable the panels to communicate with Unity, we'll need to transmit their output over the internet. This will likely require a socket server to listen for panel output and relay it to Unity.

Here's how the communication pipeline should ideally work:

panel -> local program (listening for output and connected to the socket server) -> Socket server -> Unity client(s)

If you provide the code, I can work on adding connectivity to a socket server. For testing purposes, I have a domain and can set up a locally port-forwarded server at home to act as the server (free of charge).

This approach offers several advantages:

- We can intake output from network-connected MIDI devices into Unity.

- We can create a small proof of concept to demonstrate the feasibility of the larger project.

- We can establish the groundwork for building the larger project.

30 Sep 2023

NP: As a non-tech person, this is my diagram showing my understanding of how the concept would work. Interacting with the panel in my apartment, would trigger the virtual doorway (in the Unity environment, compiled by Isaac). This means every time I touch the panel, somewhere in the virtual realm, the doorway lights up!

30 Sep 2023

IH: As an additional update I also have a very small demo scene reading in MIDI input from Unity so I know for a fact I can make that happen. Next I'm going to proof-of-concept the WebSocket server idea, as outlined in the previous email, to make sure that is feasible. I've worked with WebSocket in other personal projects, and I found a WebSocket wrapper library for Unity so should be suuuuper easy. More to come later!

The attached video is just a very small demo of the above as a proof of concept.

I have a NodeJS express / socket server running. Express to host the very basic webpage and socket to communicate to unity.

Unity connects to the socket server and updates the website with the cube's color.

The MIDI piano shows MIDI input working

and lastly the button on the webpage allows an external source (think of the webpage as a stand-in for the mailbox / camera / etc) to have an effect in Unity (changing the cube to green, which the piano cannot do).

IH: The only missing pieces are:

Getting the VR part up and running - There is built-in stuff in Unity to make that piece easy enough. (Gotta dust off and charge my Quest 2).

Making sure I can use the data from the hardware and the others are on board and can interface with such a setup with their hardware.

Once we have those, we have all the pieces working and it's just about building out the setup and the software.

It seems small, this demo. However, it proves we can put everything together to make the vision happen!

29 Sep 2023

IH: I've recently started working with Unity and have been delving into the intricacies of communication between hardware and software. In my exploration, I'm considering the implementation of a socket server to act as a bridge between all our software and hardware components, unless, of course, this concept has already been explored or put into practice.

Here's a breakdown of how the socket server would function:

For Physical on-site components (IR):

Register Person: This function would add a person to the server's data and transmit it to our VR clients, keeping track of this entity until it's removed.

Update Tracked Position: It involves updating the IR entity data on the server, which would then be sent to our Unity VR client, thereby updating virtual representations.

Remove Tracked Person: Removing a person from the server's data would prompt Unity to respond by removing their representation.

Message From Mailbox: Messages from the IR mailbox would be sent to the server for Unity to display to all or selected clients.

IR to VR Interaction: Messages with IR to VR interaction ID and parameters would be transmitted to the server.

Early concept sketch

For VR Clients:

Update VR User: This function would handle the updating of VR user positional information and other relevant parameters, facilitating communication between clients and the IR space.

VR to IR Interaction: Messages containing VR to IR interaction ID and associated parameters would be sent to the server.

The socket server would also emit messages such as:

VR to IR Interaction: Ensuring that IR components respond appropriately to VR space inputs using the provided ID and parameters.

IR to VR Interaction: Ensuring that VR components respond to IR space inputs using the provided ID and parameters.
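The register / update / remove flow above maps naturally onto a small piece of server state. The sketch below keeps tracked people in a dictionary and records what would be emitted to VR clients. It is plain Python with no networking, and the event names and message shapes are invented for illustration; the proposal does not define them.

```python
class PresenceServer:
    """In-memory stand-in for the socket server's tracked-person state."""

    def __init__(self):
        self.people = {}     # person id -> last known position
        self.outbox = []     # messages that would be emitted to VR clients

    def handle(self, event, data):
        # Event names are hypothetical labels for the functions listed above
        if event == "register_person":
            self.people[data["id"]] = data["position"]
        elif event == "update_tracked_position":
            if data["id"] not in self.people:
                return       # ignore updates for people never registered
            self.people[data["id"]] = data["position"]
        elif event == "remove_tracked_person":
            self.people.pop(data["id"], None)
        else:
            raise ValueError(f"unknown event: {event}")
        self.outbox.append((event, data))

server = PresenceServer()
server.handle("register_person", {"id": "p1", "position": (0.0, 0.0, 2.0)})
server.handle("update_tracked_position", {"id": "p1", "position": (0.5, 0.0, 1.5)})
snapshot = dict(server.people)
server.handle("remove_tracked_person", {"id": "p1"})
```

Keeping the state on the server means a VR client that connects late can be sent the current set of people rather than having to replay every earlier message.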

26 Sep 2023

NP: The original concept includes a doorway with sensors (on a physical site), which are triggered when a person walks through it. The sensor data is then used to create a virtual persona (as a point cloud) seen moving through the virtual doorway in the virtual environment (in real time).

For the proof of concept it makes sense to simplify the concept and use an alternative touchpoint to replace the doorway in the first instance. JM pointed out that touch sensors are tricky to use outside as humidity has a big effect on them and it might be hard to make a reliable on/off switch. This is one of the reasons I thought we might use the ‘Touch Panel’ (previously created with JM) which would function as this new touchpoint, and it’s already set up and installed in my apartment. The touch panel uses a proximity sensor to trigger increasingly bright light as someone moves close to it.

Initial questions included: If someone touches the panel to trigger light - how can we capture that data in order to use it in Unity? It could be a binary response, did someone touch the panel, yes/no.

We looked at sending MIDI data from the touch panel into Unity [https://github.com/keijiro/Minis]

IH: The only mystery to me (which I think JM will help answer) is how to get the touch panel interaction over the internet. JM may have an API setup already or we may need to build one. Either way once we have the data pipeline figured out, we can make that no problem!